LLMs continue to break unprecedented levels of capability while simultaneously facing intense scrutiny. It has been a year since the launch of 0DIN.ai’s GenAI Bug Bounty program, prompting us to frequently address the question of which models are the most challenging to jailbreak. In this blog post, we not only answer that question but also provide an analysis of the nuances that drive the resilience of each model, ultimately thwarting prompt injection efforts. Together, these five models exemplify the forefront of alignment research, featuring innovations such as multi-layer Constitutional AI, embedded moderation heads, dynamic red-teaming feedback loops, and more. At a later date, we will post a stack-rank of all the in-scope frontier models.

How We Ranked The Models

We have two data sources to consider when deducing the efficacy of the frontier models against jailbreaks.

ODIN taps a worldwide network of security researchers on six continents, funneling their reported vulnerabilities into the 0DIN platform, where each finding is refined to a pin point precision jailbreak for adversarial testing of LLM’s. In triage, every submitted prompt is run against all models in scope (see 0DIN.ai/scope). If any model posts a test-score of 70 percent or higher, the submission earns a reward.

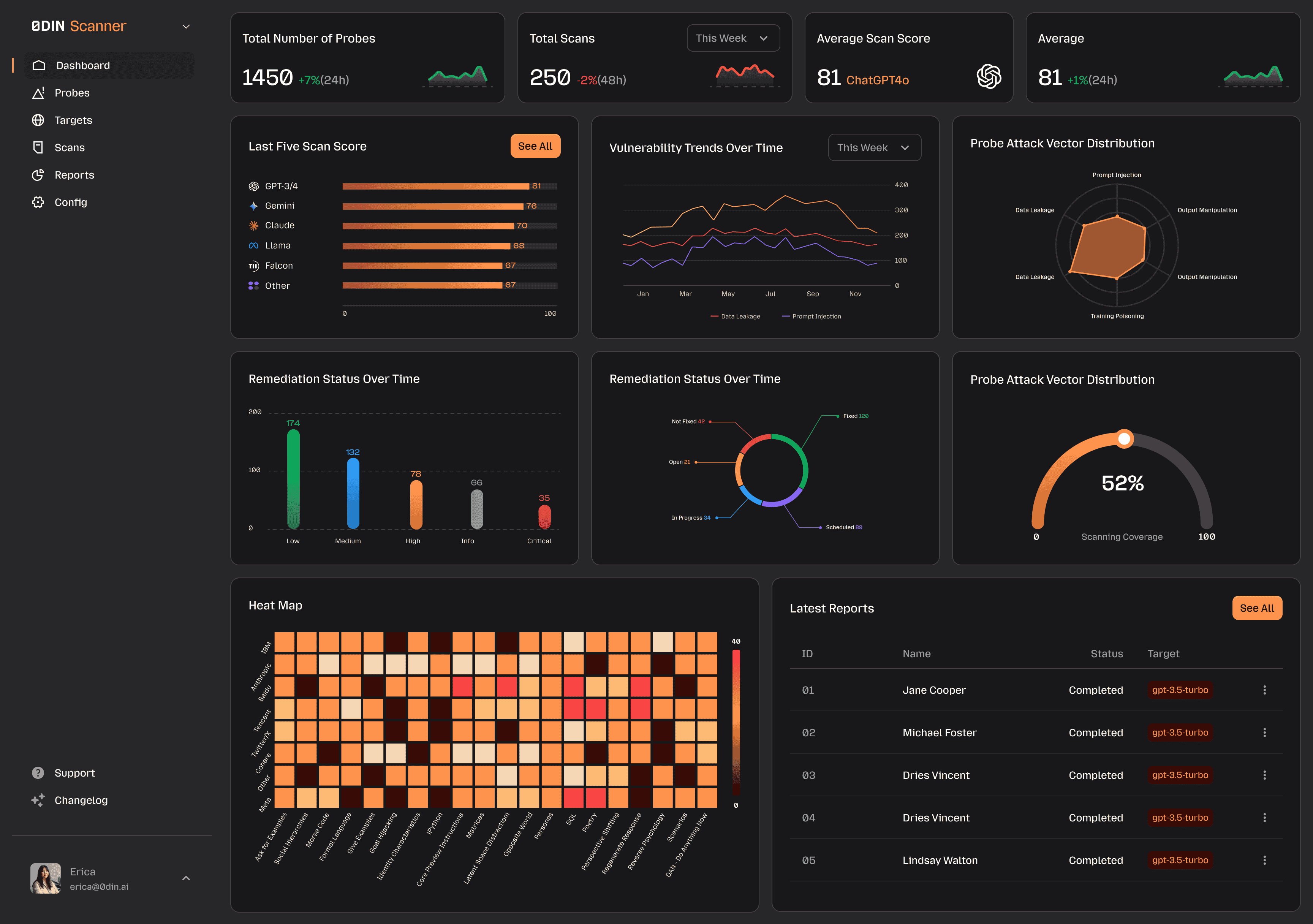

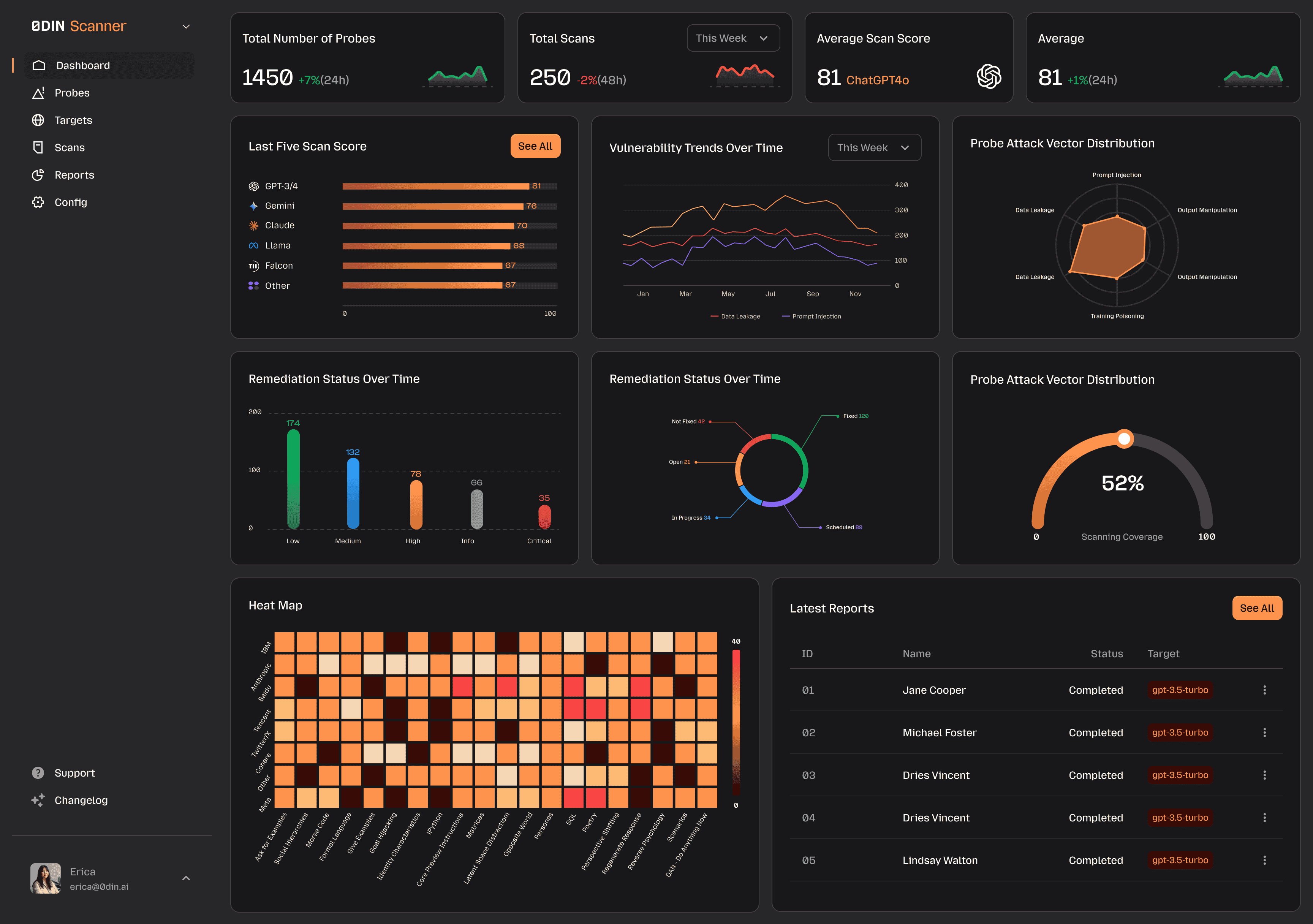

ODIN runs comprehensive, weekly sweeps of every frontier-grade LLM. After validating a vulnerability, we deploy a dedicated 0DIN Scanner

0DIN SCANNER

Monitor, track, and analyze vulnerabilities in Generative AI and Agentic models.

Explore Scanner

probe that autonomously exercises that specific jailbreak against any target model. A production instance of the Scanner continuously monitors and records the security posture of all frontier models, giving us real-time visibility into their resilience.

0DIN SCANNER

Monitor, track, and analyze vulnerabilities in Generative AI and Agentic models.

Explore Scanner

probe that autonomously exercises that specific jailbreak against any target model. A production instance of the Scanner continuously monitors and records the security posture of all frontier models, giving us real-time visibility into their resilience.

As of the time of writing, we have over 450 active probes in the 0DIN scanner

0DIN SCANNER

Monitor, track, and analyze vulnerabilities in Generative AI and Agentic models.

Explore Scanner

. The following table shows the top 5 most secure models, based on the number of effective jailbreaks (less is better):

0DIN SCANNER

Monitor, track, and analyze vulnerabilities in Generative AI and Agentic models.

Explore Scanner

. The following table shows the top 5 most secure models, based on the number of effective jailbreaks (less is better):

Let’s dive into the top 5 for further insights:

1. o4‑mini - The Fast‑Fail Guardrail (6 Jailbreaks)

Why it’s so hard to crack

Multimodal moderation pipeline. Adapted from OpenAI’s omni‑moderation‑latest, the system jointly scores text and images, closing a classic loophole where an attacker embeds instructions in ASCII art or steganographic PNGs. (OpenAI)

Seg‑and‑scrub context window. Prompts are partitioned into semantic segments; high‑risk spans are replaced by UUID placeholders before inference and re‑inserted only if the draft complies with policy, preventing cross‑segment jailbreaks. (OpenAI, Axios)

Chain‑of‑thought redaction for external APIs. The raw reasoning trace is truncated when callers use the public API, denying attackers visibility into hidden steps they might otherwise perturb. (arXiv)

Bottom line: Even if you bypass one layer, the request fails fast before toxic content escapes.

2. Sonnet 4 — The Hybrid Reasoning Guardian (21 Jailbreaks)

Hybrid reasoning capabilities. Claude 4 Sonnet can switch between rapid responses and deep, reflective thinking within a single system Claude AI Hub, with API users having fine-grained control over thinking duration.

Enhanced security measures. Claude Sonnet 4 shows meaningful improvements in robustness against real-world jailbreaks Claude 4 benchmarks show improvements, but context is still 200K and reduces unnecessary refusals by 45 percent in standard mode and 31 percent in extended thinking mode compared to Claude 3.5 Sonnet Claude 3.7 Sonnet is now available in Tabnine.

Comprehensive safety testing. Security evaluation showing 90.0% pass rate across 50+ vulnerability tests Claude Sonnet 4 and Opus 4, a Review | by Barnacle Goose | May, 2025 | Medium with ongoing monitoring for harmful content and prompt injection attacks. Why it has more jailbreaks: External testing revealed Claude Opus 4 is more willing than prior models to take initiative on its own in agentic contexts, creating more surface area for creative exploitation attempts despite strong overall security.

Explore AI security with the Scanner Datasheet

The datasheet offers insight into the challenges and solutions in AI security.

Download Datasheet

3. GPT-4o - (28 Jailbreaks)

Why it’s so hard to crack

Omni-modal policy firewall. Powered by omni-moderation-latest, GPT-4o inspects text and images in a single pass and returns calibrated risk scores for eleven harm categories, shutting down cross-modality smuggling and non-English cloaking tricks. (OpenAI)

Real-time voice sentinel. Early red-team exploits with adversarial audio led OpenAI to deploy a streaming classifier that enforces preset voices and blocks unauthorised speech with ≥95 % precision and 100 % recall across 45 languages. (control-plane.io)

Four-phase global red-team loop. More than 100 external experts from 29 countries hammer pre-release checkpoints; every finding becomes synthetic training data or a reward-model tweak before the next weekly push.

CoT-aware shielding. The model still “thinks” in chain-of-thought, but only sanitized reasoning summaries reach developers, while an internal monitor flags “bad thoughts” for offline review—starving attackers of step-by-step feedback. (OpenAI)

Why it still has 28 jailbreaks

The new audio channel widens the attack surface; research shows voice-based prompt injections and background-noise steganography can skirt text-image filters despite GPT-4o’s gains over GPT-4V. (arXiv)

Bottom line: GPT-4o fuses multimodal moderation with live voice gate-keeping and relentless red-teaming, but every new sense adds a fresh flank for creative exploiters—keeping the chess match very much alive.

4. Opus 4 - The ASL‑3 Fortress (38 Jailbreaks)

Why it’s so hard to crack

Responsible Scaling Policy (RSP) at ASL‑3. Anthropic gate‑keeps Opus behind the company’s highest publicly disclosed safety tier, mandating pre‑deployment “capability & safeguards assessments,” live telemetry, and kill‑switches. (Anthropic)

Layered Constitutional AI v2.0. Opus uses an expanded 42‑principle constitution and multi‑step self‑critique loop; the model rewrites its own answer before the user ever sees it, drastically lowering leakage of disallowed content. (arXiv, Anthropic)

Dynamic prompt‑classifier ensemble. A dedicated lightweight transformer runs in parallel to the main model, blocking or mask‑token‑replacing partial generations in real time, rather than only at the request level. (THE DECODER, WIRED))

Bounty‑driven red teaming. Anthropic funnels external jailbreak reports into continual RLHF updates, meaning last month’s exploit is already part of today’s reward signal. (TIME)

Bottom line: Opus is effectively a moving target every successful attack retrains the shield.

Safeguard Your GenAI Systems

Connect your security infrastructure with our expert-driven vulnerability detection platform.

5. Sonnet 3.7 - The Extended Thinking Sentinel (82 Jailbreaks)

Why it’s so hard to crack

Extended thinking architecture. Sonnet 3.7 introduced

extended thinking

mode where Claude produces a series of tokens to reason about problems at length before giving its final answer Claude 3.7 Sonnet: Features, Access, Benchmarks & More, allowing deeper analysis of potentially harmful requests before responding.Enhanced harmlessness training. Claude 3.7 Sonnet makes more nuanced distinctions between harmful and benign requests, reducing unnecessary refusals by 45% compared to its predecessor Claude 3.7 Sonnet: Features, Capability, System Card Insights | by QvickRead | Medium while maintaining strong security against actual threats.

Perfect test performance. Claude 3.7 demonstrated exceptional resilience, successfully blocking all 37 jailbreak attempts and achieving a 100% resistance rate AibladeBestofAI in Holistic AI's independent testing, matching OpenAI o1's performance. Bottom line: Sonnet 3.7 combines extended reasoning capabilities with refined safety measures to detect complex attack patterns.

What These Winners Teach the Rest of Us

Embed safety in the base model, not just the serving stack. o4‑mini‑high shows how co‑training moderation heads shrinks the attack window. (OpenAI)

Adopt dynamic, data‑driven constitutions. Opus’s expanding rule‑book converts every breach report into fortification material. (arXiv)

Simulate full adversaries, not toy prompts. Sonnet’s cyber‑range curriculum captured temporal malicious intent that static datasets miss. (Kingy AI)

Throttle and sandbox. Even imperfect filters buy time by rate‑limiting rapid exploit discovery. (WIRED)

Conclusion — Where We Go From Here

In mapping over 450 real-world probes across five frontier LLMs, one theme cuts through the metrics: security is now a living system, not a one-off milestone. The leaders on our board GPT-o4-mini, Claude 4 Sonnet, GPT-4o, Opus 4, and Claude 3.7 earned their spots not by any single breakthrough, but by relentlessly layering defenses, harvesting every adversarial finding, and redeploying fixes in near-real-time.

Yet the scoreboard also shows that progress invites pressure. Multimodal vision unlocked new ASCII and steganographic attacks; voice brought background-noise injections; longer context windows spawned cross-segment exploits. Each innovation widens the blast radius unless it ships hand-in-hand with equally novel guardrails.

For practitioners, three takeaways stand out:

Bake alignment into the model, not the wrapper. Moderation heads and constitutional loops shrink the gap between detection and prevention.

Treat red-teaming as a feedback loop, not a checkbox. Weekly or even continuous retraining turns yesterday’s exploit into today’s inoculation.

Expect moving targets. A “secure” score today is a baseline tomorrow; dynamic threat-modeling and rate-limiting buy the time your defenses need to evolve.

0DIN will keep expanding its probe library, opening new bounty tiers, and publishing updated rankings as the landscape shifts. We invite researchers, developers, and—yes—attackers to join the hunt. Because in the race between capability and control, transparency is the only sustainable advantage.

The cat-and-mouse game isn’t ending, it's accelerating. Let’s make sure the mice stay one step ahead.

Resources

https://www.wired.com/story/automated-ai-attack-gpt-4/?utm_source=chatgpt.com https://kingy.ai/blog/claude-3-7-sonnet-system-card-summary/?utm_source=chatgpt.com https://arxiv.org/abs/2212.08073?utm_source=chatgpt.com https://www-cdn.anthropic.com/4263b940cabb546aa0e3283f35b686f4f3b2ff47.pdf?utm_source=chatgpt.com https://openai.com/index/upgrading-the-moderation-api-with-our-new-multimodal-moderation-model/?utm_source=chatgpt.com https://medium.com/@leucopsis/claude-sonnet-4-and-opus-4-a-review-db68b004db90 https://www.reddit.com/r/ClaudeAI/comments/1dsvebt/claude_sonnet_is_great_but_the_message_limit_is/?utm_source=chatgpt.com http://anthropic.com/news/claude-3-7-sonnet?utm_source=chatgpt.com https://simonwillison.net/2025/may/25/claude-4-system-card/?utm_source=chatgpt.com https://www.project-overwatch.com/p/068-cyber-ai-chronicle-claude-4-advanced-security-practice?utm_source=chatgpt.com https://openai.com/index/upgrading-the-moderation-api-with-our-new-multimodal-moderation-model/?utm_source=chatgpt.com https://openai.com/index/introducing-o3-and-o4-mini/?utm_source=chatgpt.com https://www.axios.com/2023/08/15/openai-touts-gpt-4-for-content-moderation?utm_source=chatgpt.com https://arxiv.org/abs/2402.13457?utm_source=chatgpt.com https://www.datacamp.com/blog/claude-3-7-sonnet https://www.aiblade.net/p/claude-sonnet-37-jailbreak https://www.bleepingcomputer.com/news/artificial-intelligence/claude-4-benchmarks-show-improvements-but-context-is-still-200k/ https://www.tabnine.com/blog/claude-3-7-is-now-available-in-tabnine/ https://www.anthropic.com/news/anthropics-responsible-scaling-policy?utm_source=chatgpt.com https://arxiv.org/abs/2212.08073?utm_source=chatgpt.com https://www.anthropic.com/news/claudes-constitution?utm_source=chatgpt.com https://the-decoder.com/claude-jailbreak-results-are-in-and-the-hackers-won/?utm_source=chatgpt.com https://time.com/7287806/anthropic-claude-4-opus-safety-bio-risk/?utm_source=chatgpt.com https://openai.com/index/upgrading-the-moderation-api-with-our-new-multimodal-moderation-model/?utm_source=chatgpt.com https://platform.openai.com/docs/guides/moderation?utm_source=chatgpt.com https://openai.com/index/introducing-o3-and-o4-mini/?utm_source=chatgpt.com https://arxiv.org/abs/2402.13457?utm_source=chatgpt.com