BL0G

11 min read

February 19, 2026

Your AI Agent Has a Memory Problem: Rewrite the Past, Own the Agent

5 min read

December 24, 2025

Twas the Night Before Jailbreaks: Introducing 0DIN Sidekick

3 min read

December 23, 2025

Agent 0DIN: A Gamified CTF for Breaking AI Systems

4 min read

December 22, 2025

Introducing Achievements on 0DIN.ai

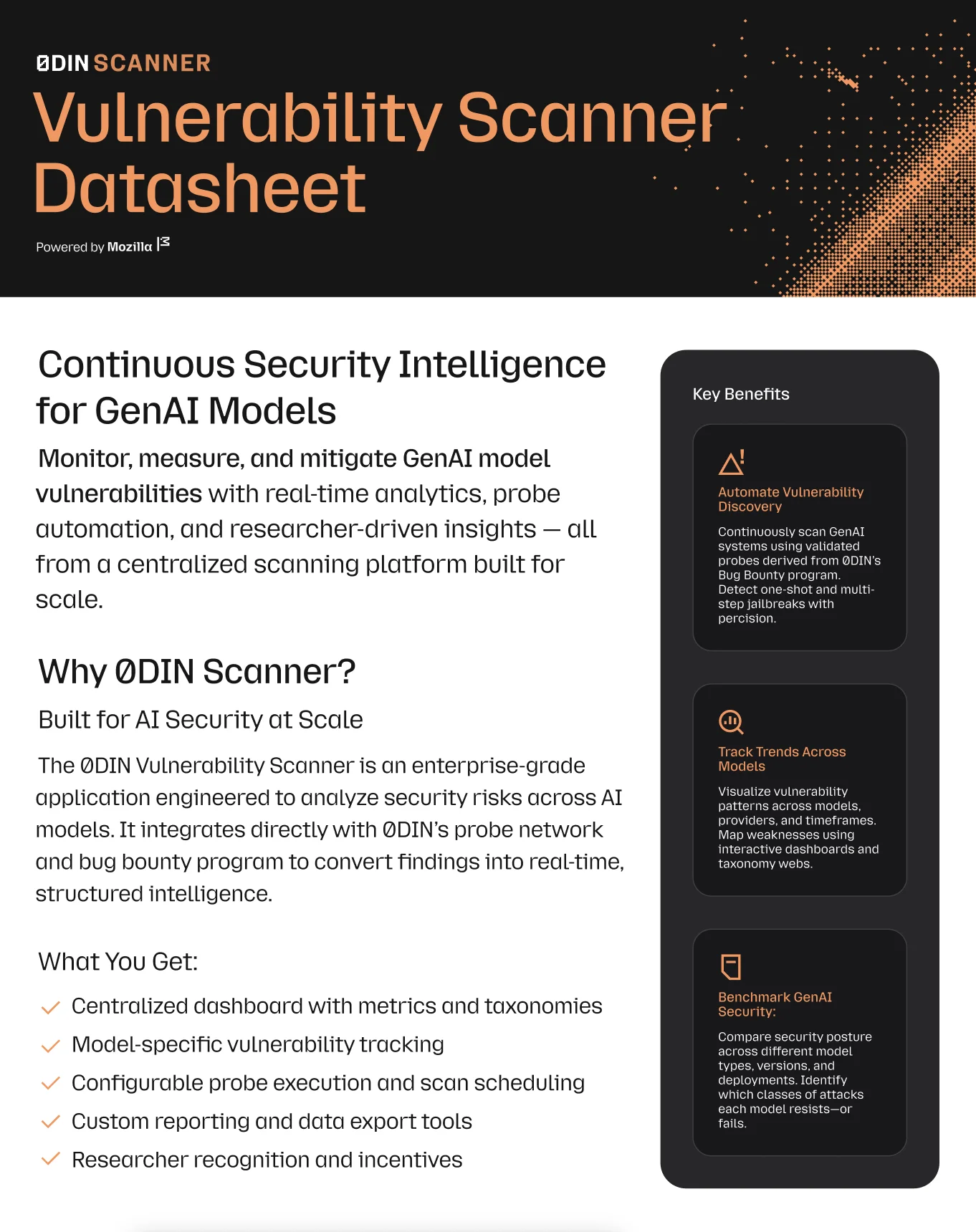

Explore AI security with the Scanner Datasheet

The datasheet offers insight into the challenges and solutions in AI security.

Our articles

Secure People, Secure World.

Discover how 0DIN helps organizations identify and mitigate GenAI security risks before they become threats.
